Crosschq Blog

Prompt Engineer Interview Questions

Why Hiring the Right Prompt Engineer Drives Competitive Advantage Through AI

Prompt Engineers are the craftspeople who unlock the full potential of large language models by designing, testing, and optimizing prompts that produce reliable, high-quality outputs at scale. They bridge the gap between AI capabilities and business requirements, transforming vague user needs into precise instructions that guide LLMs to generate valuable results. Where traditional engineers work mainly in code, Prompt Engineers work mainly in natural language instructions, which serve as the programming layer for generative AI systems.

A structured interview approach helps you assess candidates on critical competencies like prompt design methodology, model behavior understanding, iterative optimization techniques, safety considerations, and cross-functional collaboration with product teams, engineers, and subject matter experts.

Best Interview Questions to Assess a Prompt Engineer Candidate

Behavioral Questions

- Tell me about a time you significantly improved a prompt's performance through iterative refinement. What was your process and what metrics did you track?
- Describe a situation where a prompt produced inconsistent or unexpected outputs. How did you diagnose the issue and achieve more reliable results?
- Share an example of when you had to balance multiple competing objectives in a prompt (like accuracy, conciseness, and tone). How did you make trade-offs?
- Give me an example of a time you identified and mitigated bias or safety issues in AI-generated content. What was your approach?
- Can you tell me about a time you had to explain prompt engineering best practices to non-technical stakeholders or help them write better prompts?

Situational Interview Questions

- If a prompt works well with GPT-4 but fails with Claude or other models, how would you approach making it more model-agnostic?
- How would you handle a request to create prompts for a sensitive use case where harmful outputs could have serious consequences?
- What would you do if stakeholders want immediate results but you know the prompts need more testing and refinement?
- If you discover that your carefully crafted prompts are being bypassed by users writing their own, how would you address this?
- How would you approach building a prompt library for a team when there are no existing standards or documentation?

Prompt Design and Optimization Questions

- Walk me through your process for designing a prompt from scratch for a new use case. What steps do you take?
- How do you approach few-shot learning versus zero-shot prompting? When would you use each technique?
- Describe your strategy for implementing chain-of-thought reasoning in prompts. What problems does it solve?
- What's your approach to prompt versioning and A/B testing different prompt variations?
- How do you handle context window limitations when you need to provide extensive background information?
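For interviewers who want a concrete reference point when scoring answers to the few-shot versus zero-shot question, here is a minimal sketch of the difference. The `build_prompt` helper, the `Input:`/`Output:` layout, and the sentiment task are all hypothetical choices made for illustration, not a standard format; a strong candidate may well structure things differently.

```python
def build_prompt(instruction, query, examples=()):
    """Assemble a prompt string (hypothetical helper for illustration).

    With no examples the result is a zero-shot prompt: instruction plus query.
    With examples it becomes few-shot: worked input/output pairs are placed
    between the instruction and the query so the model can imitate them.
    """
    parts = [instruction.strip()]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The query always comes last, with the output left open for the model.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


instruction = "Classify the sentiment of the input as positive or negative."

# Zero-shot: relies entirely on the instruction.
zero_shot = build_prompt(instruction, "The onboarding was painless.")

# Few-shot: the same instruction plus two made-up labeled examples.
few_shot = build_prompt(
    instruction,
    "The onboarding was painless.",
    examples=[
        ("Support never replied to my ticket.", "negative"),
        ("Setup took two minutes and just worked.", "positive"),
    ],
)

print(zero_shot)
print("---")
print(few_shot)
```

A useful follow-up is to ask the candidate what the examples cost them: each one consumes tokens and can bias the model toward the examples' wording or label distribution.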

Technical Assessment Questions

LLM Behavior and Theory

- Explain how temperature and top-p sampling affect model outputs and when you would adjust these parameters.
- What are the key differences in prompting strategies between different model families (GPT, Claude, Llama, etc.)?
- How do you handle hallucinations and ensure factual accuracy in LLM outputs?
- Describe the role of system messages versus user messages and how you leverage each effectively.
- What's your understanding of how tokenization affects prompt design and cost optimization?
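As a reference point for the temperature and top-p question, the sketch below shows one common way these two parameters are described: temperature rescales the model's logits before softmax, and top-p (nucleus) sampling keeps the smallest set of most likely tokens whose cumulative probability reaches the threshold. The function names and the three-token logits are made up for illustration, and model providers may implement the details differently.

```python
import math


def apply_temperature(logits, temperature):
    """Turn logits into probabilities after dividing by the temperature.

    Lower temperatures sharpen the distribution toward the top token;
    higher temperatures flatten it.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalize. Returns {token_index: probability}."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


logits = [2.0, 1.0, 0.0]  # illustrative scores for three candidate tokens
for t in (0.5, 1.0, 2.0):
    probs = apply_temperature(logits, t)
    print(t, [round(p, 3) for p in probs], top_p_filter(probs, 0.9))
```

Candidates who understand the mechanics can usually explain why low temperature suits extraction or classification tasks and why higher values are reserved for creative generation.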

Production and Integration

- How do you ensure prompt reliability and consistency when deploying to production at scale?
- Describe your experience with prompt injection attacks and defense strategies.
- What's your approach to monitoring and evaluating prompt performance over time?
- How do you balance prompt complexity with API costs and latency requirements?
- Walk me through how you would build a structured output system using prompts (like generating valid JSON or following specific formats).
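For the structured output question, one pattern candidates often describe is validate-and-retry: parse the model's reply, check it against the expected shape, and re-prompt if it fails. The sketch below is only an illustration of that idea under stated assumptions. `call_model` is a hypothetical stand-in for whatever LLM client a team uses (any function that takes a prompt string and returns a string), and the `SCHEMA` keys are invented for the example.

```python
import json

# Hypothetical expected shape: key name -> accepted Python type(s).
SCHEMA = {"sentiment": str, "confidence": (int, float)}


def parse_structured_output(raw, schema):
    """Return the parsed dict if raw is a JSON object matching schema, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    for key, expected_type in schema.items():
        if key not in data or not isinstance(data[key], expected_type):
            return None
    return data


def generate_with_retries(call_model, prompt, schema, max_attempts=3):
    """Call the model until its reply validates, up to max_attempts times.

    call_model is an assumed callable: prompt string in, reply string out.
    """
    current_prompt = prompt
    for _ in range(max_attempts):
        parsed = parse_structured_output(call_model(current_prompt), schema)
        if parsed is not None:
            return parsed
        # Append a corrective instruction before trying again.
        current_prompt = (
            prompt
            + "\n\nYour previous reply was not a valid JSON object with keys "
            + ", ".join(schema)
            + ". Reply with JSON only."
        )
    raise ValueError(f"No valid structured output after {max_attempts} attempts")


# Usage with a fake model that fails once, then returns valid JSON.
replies = iter(["Sure, here you go!", '{"sentiment": "positive", "confidence": 0.9}'])
print(generate_with_retries(lambda p: next(replies), "Classify: great product", SCHEMA))
```

Strong answers typically go further than this sketch, for example by discussing provider-side structured output features, schema libraries, or how retry counts affect cost and latency.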

How to Use These Questions in a Structured Interview

Prompt Engineering roles require both creative language skills and systematic testing methodologies. Structured interviews help hiring teams consistently evaluate candidates across prompt crafting ability, model understanding, optimization experience, and safety awareness, so you find engineers who can design prompts that deliver consistent, valuable results.

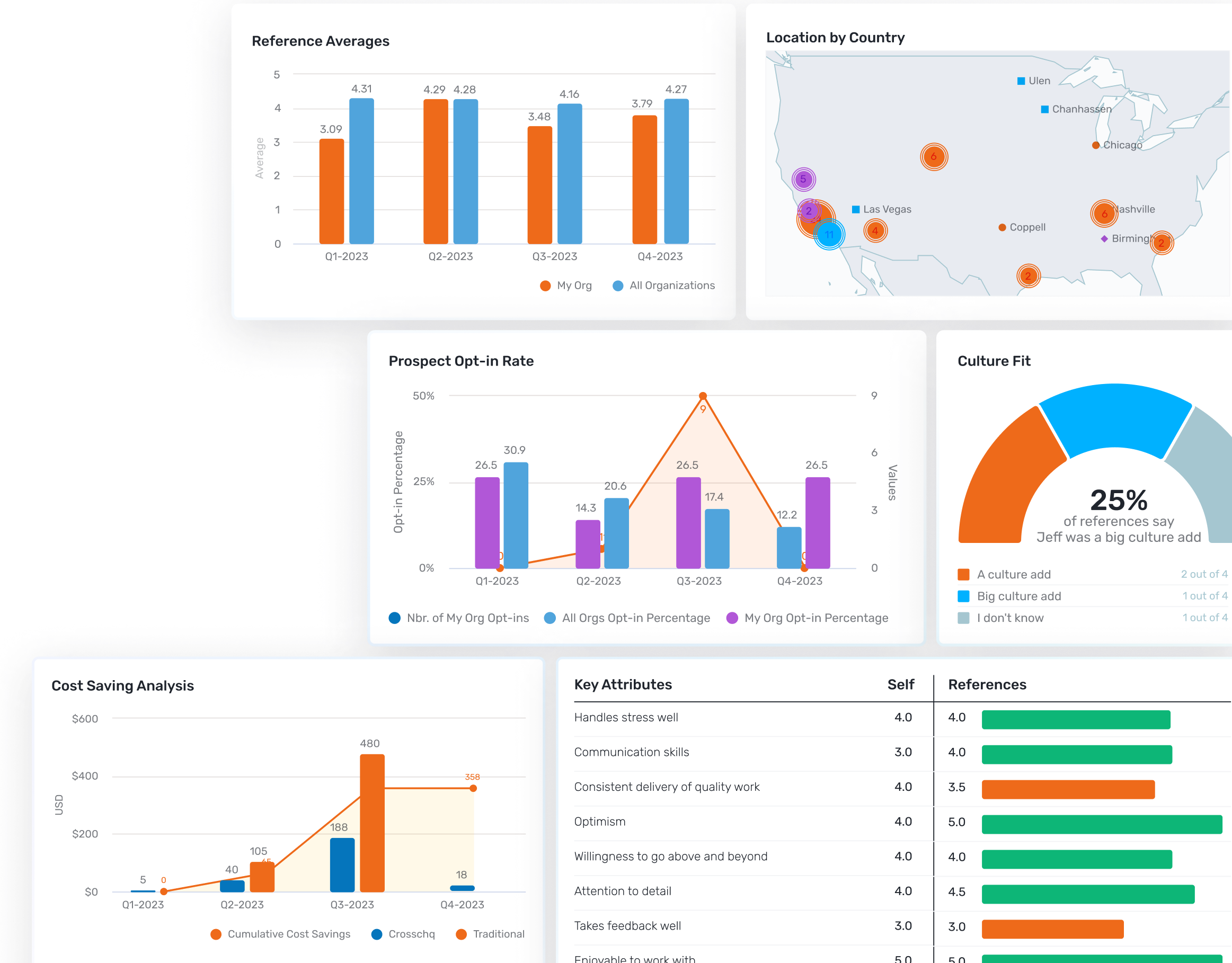

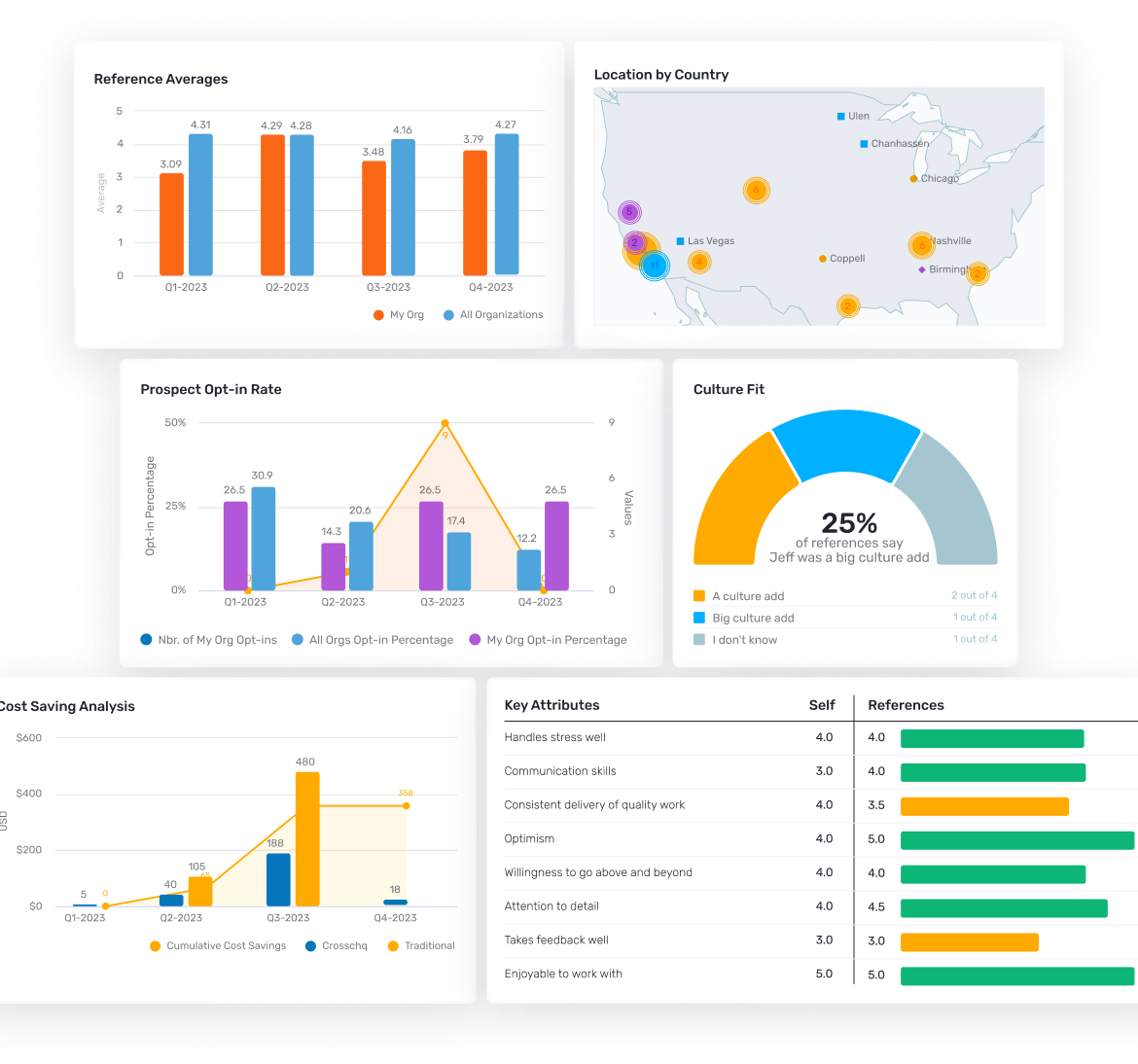

With Crosschq's AI Interview Agent, hiring managers can streamline structured interviews, standardize scoring, and make data-informed hiring decisions for critical Prompt Engineering roles that maximize your AI investment.

Prompt Engineer FAQs

How many interview rounds are typical for a Prompt Engineer role?

Usually 3–5 rounds, including initial screening, prompt design practical assessment, prompt optimization challenge, technical discussion about LLMs, and cultural fit assessment with product and engineering teams.

Why use structured interviews for Prompt Engineer hiring?

They ensure comprehensive evaluation across prompt design creativity, systematic optimization skills, model knowledge, and safety considerations while maintaining consistency across candidates with diverse backgrounds in linguistics, UX writing, technical writing, or software engineering.

What defines a successful Prompt Engineer?

Ability to craft effective prompts that consistently produce desired outputs, systematically test and optimize prompt performance, understand model capabilities and limitations, implement safety guardrails, document best practices, and collaborate effectively with product teams and engineers.

What's the difference between a Prompt Engineer and an AI Engineer?

Prompt Engineers focus on designing natural language instructions to guide LLM behavior, while AI Engineers build the underlying systems, APIs, and infrastructure that power AI applications. Prompt Engineers typically have stronger linguistic and UX skills, while AI Engineers have deeper programming and systems knowledge.

